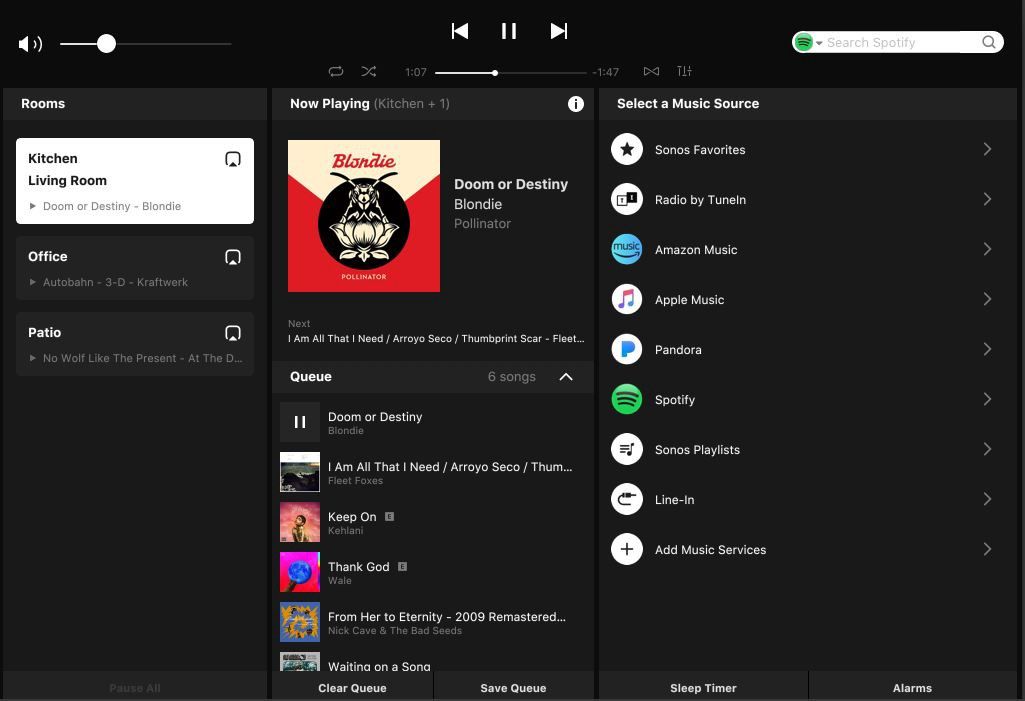

SONOS is a brand of connected speakers that lets users on the local network listen to music chosen through the SONOS-specific Android / iOS application, or through third-party subscription services such as Deezer, Spotify, or YouTube Music.

SONOS has been growing in popularity and user base over the last few years, and there is a high probability that you have already used one. Part of this success is that SONOS provides affordable, high-quality, and easy-to-use products. You pull it out of the box, connect it to your network, set it up with your music provider, and that's it!

The problem with SONOS products is that a "music provider" is a requirement. As you probably already know, using a music provider with your SONOS speaker is a big part of the product experience. You can technically use your SONOS to play local music from your device, but I found the experience not satisfying at all.

In the old days you could also combine your SONOS with an iOS device and its AirPlay technology, which allowed you to stream whatever sound came from your device, YouTube videos included. Unfortunately, this is not available on Android / PC devices.

So, that takes us to the subject of playing YouTube videos. Even though YouTube videos are publicly available and their content is free, SONOS can't play them without YouTube Music, which is not cool. YouTube is a great place for finding music, especially old live concerts or forgotten songs.

So, "How do we play YouTube videos on a SONOS?"

On our way digging into the SONOS system, we'll discover exotic audio formats, interesting stream management techniques, and how we can abuse innocent features "for fun and profit".

Reverse Engineering of SONOS desktop application.

As a start, I chose to study the SONOS desktop application in order to better understand the protocols and specifications involved in switching music, adding a new radio, turning the volume up / down, etc.

So I fire up Wireshark and filter by my SONOS IP address. The first packets are pretty clear: SONOS uses SSDP for device discovery and UPnP for device control, which are standard for this kind of device.
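To make the discovery step concrete, here is a minimal Python sketch of an SSDP search. The multicast address and the M-SEARCH format come from the SSDP convention; the search target `urn:schemas-upnp-org:device:ZonePlayer:1` and the function names are assumptions for illustration, not something taken from the capture.

```python
import socket

# Standard SSDP multicast group and port.
SSDP_ADDR = ("239.255.255.250", 1900)


def build_msearch(search_target="urn:schemas-upnp-org:device:ZonePlayer:1", mx=1):
    """Build an SSDP M-SEARCH datagram (the search target is an assumption)."""
    lines = [
        "M-SEARCH * HTTP/1.1",
        f"HOST: {SSDP_ADDR[0]}:{SSDP_ADDR[1]}",
        'MAN: "ssdp:discover"',
        f"MX: {mx}",
        f"ST: {search_target}",
        "",
        "",  # two empty items produce the blank line that ends the headers
    ]
    return "\r\n".join(lines).encode("ascii")


def discover(timeout=2.0):
    """Send one M-SEARCH and collect (ip, raw_response) pairs until timeout."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.settimeout(timeout)
    found = []
    try:
        sock.sendto(build_msearch(), SSDP_ADDR)
        while True:
            try:
                data, (ip, _port) = sock.recvfrom(2048)
            except socket.timeout:
                break
            found.append((ip, data.decode("utf-8", "replace")))
    finally:
        sock.close()
    return found
```

Calling `discover()` on the same network as a speaker should return its IP address along with the response headers, which is the address to filter on in Wireshark.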

After some digging, I find a really interesting request that is linked to the Web-Radio feature of the device.

Indeed, one of the SONOS features is that it can stream web radios, either by choosing them from a list in the app or by adding your own!

Here is the linked request:

This dump is a switch request to a previously added web-radio:

x-rincon-mp3radio://100radio-albi.ice.infomaniak.ch/100radio-albi-128.mp3

Note the custom URL scheme, which indicates that it is trying to play an MP3 radio. Later tests will show that it works not only for MP3 but for almost every supported encoding.

After these discoveries I googled this prefix, hoping to find other ones that could be useful in our case. I found this post ( https://community.ezlo.com/t/sonos-plugin/169644/204) from 2012 by someone who extracted the supported Web-Radio prefixes from a DLL in a (very) old version of the SONOS application. Here it is:

http-get::audio/mp3:

x-file-cifs::audio/mp3:

http-get::audio/mp4:

x-file-cifs::audio/mp4:

http-get::audio/mpeg:

x-file-cifs::audio/mpeg:

http-get::audio/mpegurl:

x-file-cifs::audio/mpegurl:

real.com-rhapsody-http-1-0::audio/mpegurl:

file::audio/mpegurl:

http-get::audio/mpeg3:

x-file-cifs::audio/mpeg3:

http-get::audio/wav:

x-file-cifs::audio/wav:

http-get::audio/wma:

x-file-cifs::audio/wma:

http-get::audio/x-ms-wma:

x-file-cifs::audio/x-ms-wma:

http-get::audio/aiff:

x-file-cifs::audio/aiff:

http-get::audio/flac:

x-file-cifs::audio/flac:

http-get::application/ogg:

x-file-cifs::application/ogg:

http-get::audio/audible:

x-file-cifs::audio/audible:

real.com-rhapsody-http-1-0::audio/x-ms-wma:

real.com-rhapsody-direct::audio/mp3:

sonos.com-mms::audio/x-ms-wma:

sonos.com-http::audio/mpeg3:

sonos.com-http::audio/mpeg:

sonos.com-http::audio/wma:

sonos.com-http::audio/mp4:

sonos.com-spotify::audio/x-spotify:

sonos.com-rtrecent::audio/x-sonos-recent:

real.com-rhapsody-http-1-0::audio/x-rhap-radio:

real.com-rhapsody-direct::audio/x-rhap-radio:

pandora.com-pndrradio::audio/x-pandora-radio:

pandora.com-pndrradio-http::audio/mpeg3:

sirius.com-sirradio::audio/x-sirius-radio:

x-rincon:::*

x-rincon-mp3radio:::*

x-rincon-playlist:::*

x-rincon-queue:::*

x-rincon-stream:::*

x-sonosapi-stream:::*

x-sonosapi-radio::audio/x-sonosapi-radio:

x-rincon-cpcontainer:::*

last.fm-radio::audio/x-lastfm-radio:

last.fm-radio-http::audio/mpeg3:

As we can see, SONOS is apparently able to play standard MP4. This should solve my problem, as the main format YouTube uses is MP4. Unfortunately, I wasn't able to make any of them work. Alright, rabbit hole, let's continue.

MP3 or not MP3, that is the question.

From our discovery, we are able to stream arbitrary MP3 files on the SONOS, so we could technically build our first POC on an MP4-to-MP3 conversion.

YouTube MP4 is stream oriented, which means that parts of the video and audio are sent over time to fill the cache (that's the white part of the video progress bar).

However, MP4 and MP3 are really different formats, and building a stream-oriented MP4-to-MP3 converter is pretty hard (if not impossible). So one step would be to download the YouTube MP4 locally, then convert it from MP4 to MP3.

That's an incredibly slow and inelegant solution, but hey! It's a first step at least.

More discoveries on SONOS supported encoding.

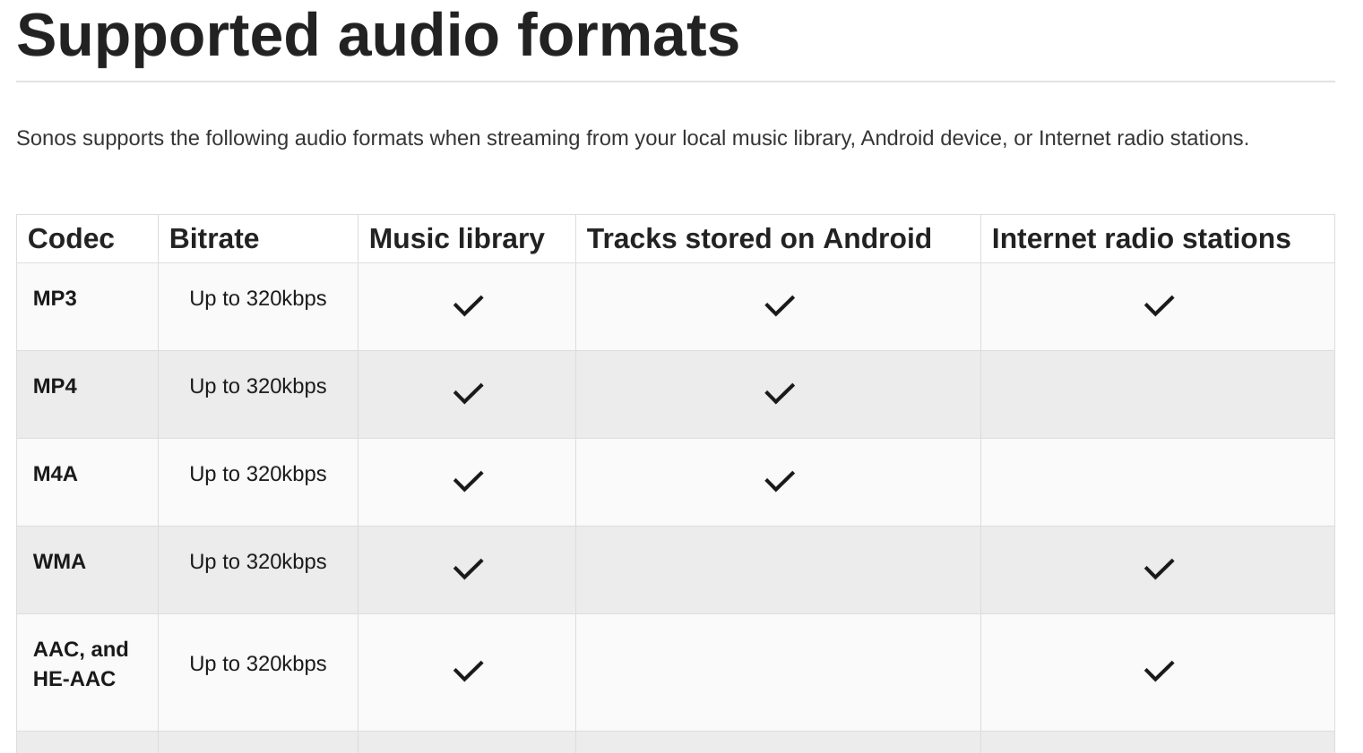

After a lot more research I found this documentation page about supported encoding:

This table says that SONOS supports AAC. This is very good news, because AAC is used to encode the audio in the MP4 video format. After a lot of tries, it seems that SONOS can't play raw AAC but needs a streaming container format named ADTS.

So we could extract the AAC part of the MP4, convert it to ADTS, and send it to the SONOS.

Actually, at this point I've been able to play ADTS-formatted AAC files on the SONOS using the previously discovered prefix "x-rincon-mp3radio".

New plan !

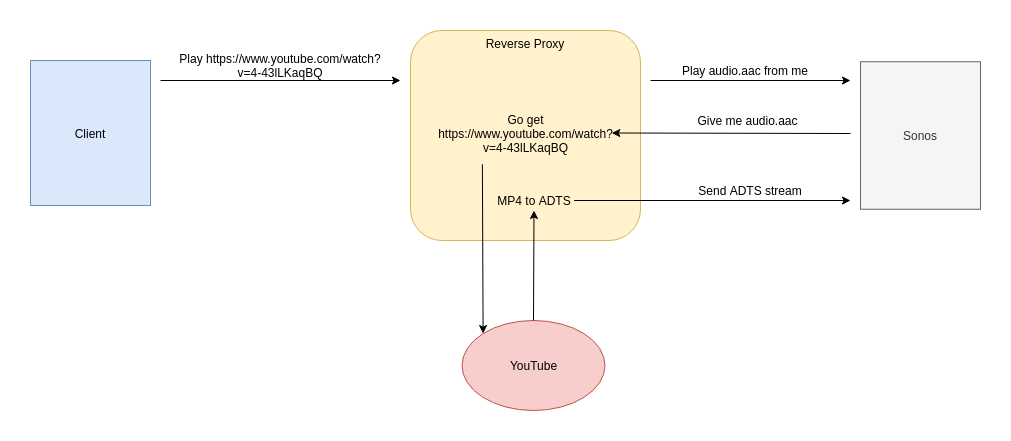

My new plan is to build a web server that serves ADTS-formatted YouTube video content.

The SONOS asks for a resource containing a YouTube video ID.

Then the server asks YouTube for the corresponding video.

For each packet received:

- Parse MP4.

- Extract AAC.

- Format it to ADTS.

- Send it back to the SONOS.

Here is a schematic:

So, in order, we first need an MP4 parsing implementation.

MP4 parsing.

But what is MP4 ?

This is a hard question, because you have to understand that MP4 is not a free format: it was developed in large part by Apple, and is documented in ( https://developer.apple.com/library/archive/documentation/QuickTime/QTFF/QTFFPreface/qtffPreface.html).

Here is a quick summary:

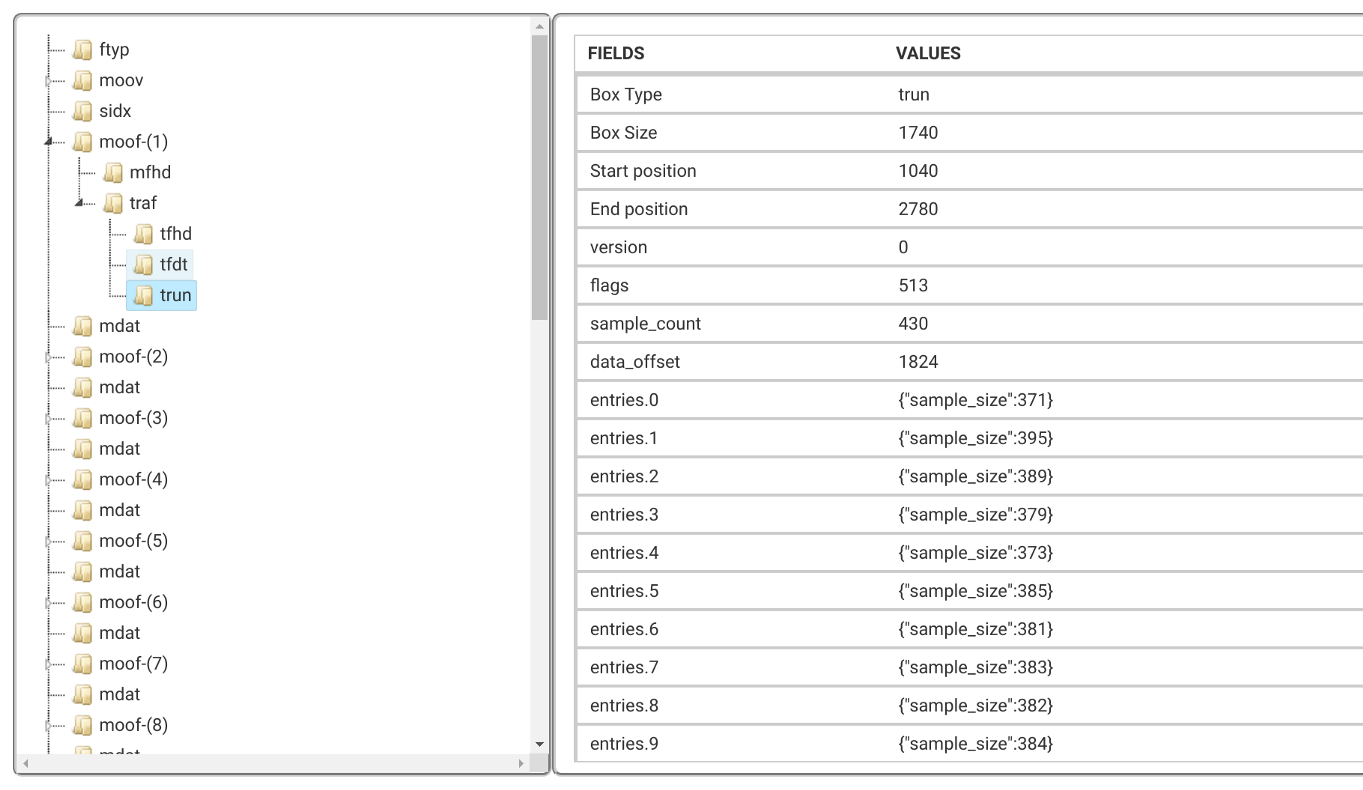

MP4 is a container format, which means it's like a bottle that doesn't care about the water in it. So you can store audio, video, metadata, and copyright information in the same format.

It is divided into chunks called atoms. An atom can also contain atoms, so the structure is recursive. Every atom has a size, a name, and a content. Among these names are mdat, moov, moof, etc. Each name defines the content of the atom: for example, moof and moov hold metadata and mdat holds the audio.

I'm going to call the order of these atoms the structure. For example, here is the structure of a YouTube video:

This MP4 structure is typical of streamed content. ftyp is mandatory as the first atom, and then each metadata atom (moov, moof) describes the next content atom. That's how the YouTube player doesn't have to wait for the video file to be downloaded entirely: after the first atoms, it knows everything it needs. Then it waits for more atoms and, for each pair of metadata and content atoms, adds them to the buffer.

That's why our code will be stream oriented. We will use the same technique as the YouTube player: our code will format AAC to ADTS by working with pairs of atoms. This greatly reduces loading time and the computing load on our server.

Stream oriented means that it uses the read method to get only the bytes we need.

The benefit is that we can pass in either a file stream or a socket stream and use the same function (very useful for tests).
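As a small illustration of that idea, here is a sketch of a helper that only relies on a `read` method, so it accepts any file-like object. The name `read_exact` is hypothetical; the loop is there because a socket-backed stream may return fewer bytes than requested.

```python
import io


def read_exact(stream, n):
    """Read exactly n bytes from any object exposing read(), or raise EOFError."""
    chunks = []
    remaining = n
    while remaining > 0:
        chunk = stream.read(remaining)
        if not chunk:
            raise EOFError(f"stream ended with {remaining} bytes still expected")
        chunks.append(chunk)
        remaining -= len(chunk)
    return b"".join(chunks)


# In a test, the input can be an in-memory buffer:
buffer = io.BytesIO(b"\x00\x00\x00\x18ftyp")
first_four = read_exact(buffer, 4)
```

With a network connection, the same function could be given `sock.makefile("rb")` or an HTTP response object instead of the `BytesIO` buffer.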

MP4 header parsing:

To get general information about the file, we need to read the first atom, called ftyp. To do this I created a class whose purpose is to be instantiated during parsing to store the result and return it.

And a parsing function:

It gets the length of the atom, then reads a body of that length, takes the two 4-byte strings at [:4] and [4:8], stores them, then returns.
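A minimal sketch of such a header parser, following the steps just described, could look like this. The names `Mp4Header` and `get_header` are assumptions for illustration, and the field at [4:8] is interpreted here as the ftyp major brand.

```python
import io
import struct
from dataclasses import dataclass


@dataclass
class Mp4Header:
    size: int         # total atom size in bytes, size field included
    atom_type: str    # body[:4], expected to be "ftyp"
    major_brand: str  # body[4:8], e.g. "dash" or "isom"


def get_header(stream):
    """Read the first atom of an MP4 stream and return it as an Mp4Header."""
    raw_size = stream.read(4)
    if len(raw_size) != 4:
        raise EOFError("stream ended before the ftyp size field")
    (size,) = struct.unpack(">I", raw_size)  # big-endian 32-bit length
    if size < 12:
        raise ValueError("atom too small to be a valid ftyp")
    body = stream.read(size - 4)
    if len(body) < 8:
        raise ValueError("ftyp body is truncated")
    atom_type = body[:4].decode("ascii")
    major_brand = body[4:8].decode("ascii")
    if atom_type != "ftyp":
        raise ValueError(f"expected ftyp as first atom, got {atom_type!r}")
    return Mp4Header(size, atom_type, major_brand)
```

Because it only calls `read`, the function can be tried on an `io.BytesIO` holding the first bytes of a file before being pointed at a live stream.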

MP4 atoms parsing:

So first we have to get all the atoms separately. To store them, I created an Atom class and a parsing function.

Here is the parsing part:

First it reads the first 4 bytes and casts them to a 32-bit integer: this is the atom size. Then it reads the atom body. In the atom body it takes the first 4 bytes and decodes them as a string: this is the atom type. Finally it returns everything as an object storing the parsed atom.
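The steps above can be sketched as follows. This is an illustration under assumptions: the names `Atom` and `get_next_atom` are hypothetical, and the special size values 0 (atom runs to end of file) and 1 (64-bit size follows) are rejected rather than handled.

```python
import io
import struct
from dataclasses import dataclass


@dataclass
class Atom:
    size: int       # total size in bytes, including the 8-byte size/type prefix
    type: str       # four-character name such as "moof" or "mdat"
    payload: bytes  # everything after the type field


def get_next_atom(stream):
    """Return the next top-level atom, or None when the stream is exhausted."""
    raw_size = stream.read(4)
    if len(raw_size) < 4:
        return None
    (size,) = struct.unpack(">I", raw_size)  # big-endian 32-bit atom size
    if size < 8:
        raise ValueError("sizes 0 and 1 (extended forms) are not handled here")
    body = stream.read(size - 4)
    if len(body) != size - 4:
        raise EOFError("atom body is truncated")
    return Atom(size, body[:4].decode("ascii"), body[4:])


def iter_atoms(stream):
    """Yield atoms one after another until the stream ends."""
    while True:
        atom = get_next_atom(stream)
        if atom is None:
            return
        yield atom
```

Looping over `iter_atoms` and printing each `atom.type` is a quick way to display the structure of a downloaded file.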

Why two separate classes and functions to do roughly the same thing?

It's just my way of organizing my code; it makes it more understandable for the next reader. You have GetHeader first, then a loop of GetNextAtomStream. I think it's clean.

Mdat & trun atom parsing:

The mdat atom contains the audio frames, and the trun atom contains the length of each AAC frame (as a 32-bit integer), which is important for building an ADTS stream. Here is the implementation for trun:
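As an illustration, a minimal sketch of reading the per-frame sizes from a trun payload could look like the code below. It follows the track fragment run layout of the ISO base media file format (a flags word, a sample count, optional fields, then per-sample entries). The function name is hypothetical, and it assumes the payload starts right after the 8-byte size/type prefix of the trun atom, which itself sits inside moof > traf.

```python
import struct

# Flag bits of the trun box that change its layout.
DATA_OFFSET_PRESENT = 0x000001
FIRST_SAMPLE_FLAGS_PRESENT = 0x000004
SAMPLE_DURATION_PRESENT = 0x000100
SAMPLE_SIZE_PRESENT = 0x000200
SAMPLE_FLAGS_PRESENT = 0x000400
SAMPLE_CTS_OFFSET_PRESENT = 0x000800


def parse_trun_sizes(payload):
    """Return the list of sample (frame) sizes stored in a trun payload."""
    version_and_flags, sample_count = struct.unpack_from(">II", payload, 0)
    flags = version_and_flags & 0xFFFFFF  # low 24 bits; top 8 bits are the version
    offset = 8
    if flags & DATA_OFFSET_PRESENT:
        offset += 4
    if flags & FIRST_SAMPLE_FLAGS_PRESENT:
        offset += 4
    sizes = []
    for _ in range(sample_count):
        if flags & SAMPLE_DURATION_PRESENT:
            offset += 4
        if flags & SAMPLE_SIZE_PRESENT:
            (size,) = struct.unpack_from(">I", payload, offset)
            sizes.append(size)
            offset += 4
        if flags & SAMPLE_FLAGS_PRESENT:
            offset += 4
        if flags & SAMPLE_CTS_OFFSET_PRESENT:
            offset += 4
    # If the size flag is absent the list stays empty: sizes then come from
    # defaults declared elsewhere, which this sketch does not cover.
    return sizes
```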

And here is the implementation for mdat. It takes the TrunAtom object as a parameter, to slice each frame independently.
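The slicing itself can be sketched in a few lines. This version takes a plain list of sizes rather than a TrunAtom object, uses a hypothetical function name, and assumes the frames are stored back to back from the start of the mdat payload.

```python
def slice_frames(mdat_payload, sizes):
    """Cut the mdat payload into frames using the sizes read from trun."""
    frames = []
    offset = 0
    for size in sizes:
        end = offset + size
        if end > len(mdat_payload):
            raise ValueError("trun sizes exceed the mdat payload length")
        frames.append(mdat_payload[offset:end])
        offset = end
    return frames
```

For example, `slice_frames(b"aaabbcccc", [3, 2, 4])` yields three frames of 3, 2, and 4 bytes.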

Main loop:

This is the first version of the main loop. It just parses the MP4 atoms, then loops over the AAC frames from mdat (sliced using trun, as explained before). This is where we're going to put our ADTS packet building implementation. It also displays some information about the other atoms.

ADTS formatting.

ADTS (Audio Data Transport Stream) is an AAC streaming container format / protocol that is not used much nowadays. Documentation about it is pretty hard to find. Files or sources using it have the extension .aac and the MIME type audio/aac.

It is pretty low-level because it uses a header - body structure. It first sends a header of 7 or 9 bytes, which uses bit-packing (so it is very efficient and light) and provides information about the body, such as the frame size and the frame count. Then it sends the body directly. After the last body byte, the receiver expects a header again, then a body, then a header, etc…

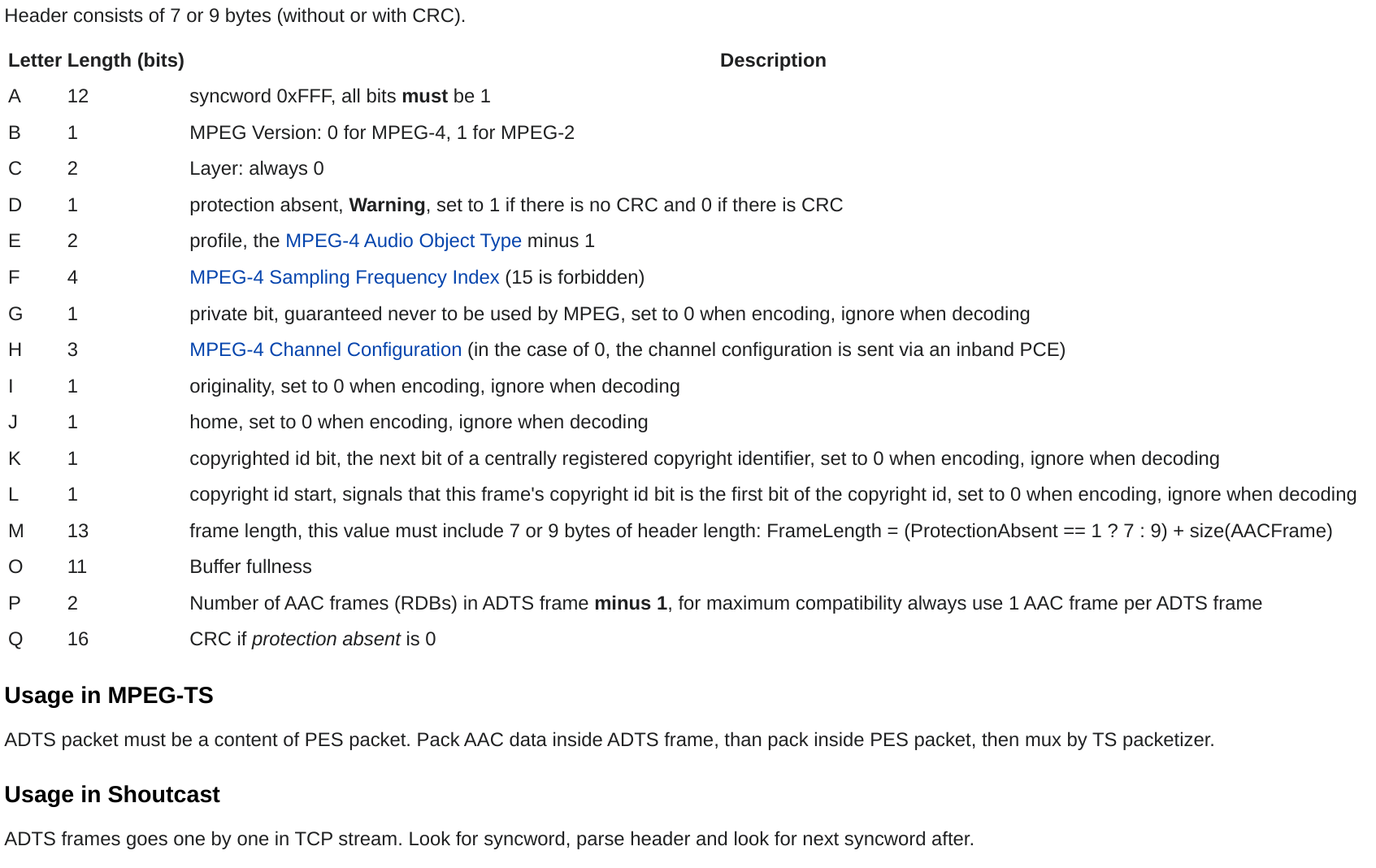

Here is the header format (each letter is a bit, each letter group is a byte):

AAAAAAAA AAAABCCD EEFFFFGH HHIJKLMM MMMMMMMM MMMOOOOO OOOOOOPP (QQQQQQQQ QQQQQQQQ)

Extracted from the only documentation I found: https://wiki.multimedia.cx/index.php/ADTS

For example, here is an ADTS header in binary:

11111111 11110001 01010000 100000 0000100000000 11111 11111100

A simple implementation of that would be to use the above header as a template, and just change the bits representing the size of the body.
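The template idea can be sketched with plain integer arithmetic instead of a bit array. The constant below is the example header with its 13-bit length field (the M bits) zeroed; read against the field layout, it encodes AAC LC, 44.1 kHz, 2 channels, no CRC. The function names are hypothetical, and a stream with other audio parameters would need a different template.

```python
# Example header from the text with the frame-length bits cleared (7 bytes).
ADTS_TEMPLATE = 0xFFF15080001FFC
HEADER_SIZE = 7
# The length field is followed by 11 buffer-fullness bits and 2 frame-count bits.
LENGTH_SHIFT = 13
MAX_FRAME_LENGTH = (1 << 13) - 1


def adts_header(payload_len):
    """Build a 7-byte ADTS header for one AAC frame of payload_len bytes."""
    frame_len = payload_len + HEADER_SIZE  # the field counts header + payload
    if frame_len > MAX_FRAME_LENGTH:
        raise ValueError("frame does not fit in the 13-bit length field")
    value = ADTS_TEMPLATE | (frame_len << LENGTH_SHIFT)
    return value.to_bytes(HEADER_SIZE, "big")


def to_adts(frame):
    """Prefix a raw AAC frame with its ADTS header."""
    return adts_header(len(frame)) + frame
```

With a 249-byte payload the frame length is 256, and `adts_header(249)` reproduces the example header bit for bit.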

Let's add a few lines to our main loop, with a yield to make it usable as a generator.

I know, I know, the bitarray part is one of the ugliest pieces of code you ever saw. (Must be a constant in Python…)

But it works! So it's going to do the job for a few tests.

So, now we have a running core that parses streamed MP4 and converts it to ADTS.

SONOS Client & Server implementation.

What we're going to do now is build the part that asks the SONOS to play an AAC source from our server, and the server itself.

My implementation will use Python with Flask.

We now need to implement a YouTube video request, to get the stream that we're going to feed to our main loop.

Get the YouTube stream

We're going to use a cool little package called pytube ( https://pypi.org/project/pytube/6.4.2/).

If you want to try it, please install the GitHub version; it seems that a lot of releases get broken, I guess due to YouTube changes.

This library can do neat stuff like listing available streams from a YouTube URL, getting the title, description, etc.

What really interests us is the feature to download a video. ( https://python-pytube.readthedocs.io/en/latest/user/quickstart.html#downloading-a-video)

Unfortunately, it downloads the video to a location on the filesystem instead of returning a stream. We just need to make a few modifications to the original method ( https://github.com/nficano/pytube/blob/master/pytube/streams.py#L202).

With it, I implemented the GetStreams and GetYoutubeVideoInfos functions.

Really simple.

So now we can write a Flask program that gets a YouTube video ID on an endpoint, gets the stream for it, then sends the ADTS back.

Here it is:
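As a rough sketch of the shape such an endpoint can take (not the original listing), the Flask app below streams whatever a generator yields, with the audio/aac MIME type mentioned earlier. The route `/play/<video_id>` and the generator `adts_chunks` are hypothetical; the generator is a stand-in that yields dummy bytes where the real YouTube fetch and MP4-to-ADTS conversion would go.

```python
from flask import Flask, Response

app = Flask(__name__)


def adts_chunks(video_id):
    """Placeholder generator: the real one would fetch the YouTube stream
    for video_id and yield ADTS packets; this one yields three dummy bytes."""
    for i in range(3):
        yield bytes([i])


@app.route("/play/<video_id>")
def play(video_id):
    # Passing a generator makes Flask send the body piece by piece
    # instead of building the whole response in memory first.
    return Response(adts_chunks(video_id), mimetype="audio/aac")


if __name__ == "__main__":
    # Listen on all interfaces so the speaker can reach the server.
    app.run(host="0.0.0.0", port=5000)
```

Streaming from a generator matters here because the speaker can start playing as soon as the first packets arrive, which matches the pair-by-pair conversion described earlier.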

Please Sonos, play my song.

So let's deal with this Sonos.

As shown in the first part of this project, we have a request that plays an AAC source. We just need to replace the x-rincon-mp3radio://100radio-albi.ice.infomaniak.ch/100radio-albi-128.mp3 part with our server address, in my case 192.168.1.16:5000, and then add our endpoint with the video ID we want to play.
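For readers without the capture at hand, a request of this kind can be sketched as a UPnP AVTransport SetAVTransportURI call. Everything below is an assumption based on the standard UPnP service rather than a copy of the captured dump: the control path, the header set, the function name, and the `/play/VIDEO_ID` endpoint are illustrative, and the speaker may need a separate Play action afterwards.

```python
from xml.sax.saxutils import escape

AVTRANSPORT = "urn:schemas-upnp-org:service:AVTransport:1"


def build_set_uri_request(sonos_host, sonos_port, stream_uri,
                          control_path="/MediaRenderer/AVTransport/Control"):
    """Build a raw HTTP request asking the speaker to switch to stream_uri."""
    body = (
        '<?xml version="1.0" encoding="utf-8"?>'
        '<s:Envelope xmlns:s="http://schemas.xmlsoap.org/soap/envelope/" '
        's:encodingStyle="http://schemas.xmlsoap.org/soap/encoding/">'
        "<s:Body>"
        f'<u:SetAVTransportURI xmlns:u="{AVTRANSPORT}">'
        "<InstanceID>0</InstanceID>"
        f"<CurrentURI>{escape(stream_uri)}</CurrentURI>"
        "<CurrentURIMetaData></CurrentURIMetaData>"
        "</u:SetAVTransportURI>"
        "</s:Body></s:Envelope>"
    ).encode("utf-8")
    headers = [
        f"POST {control_path} HTTP/1.1",
        f"HOST: {sonos_host}:{sonos_port}",
        'CONTENT-TYPE: text/xml; charset="utf-8"',
        f"CONTENT-LENGTH: {len(body)}",
        f'SOAPACTION: "{AVTRANSPORT}#SetAVTransportURI"',
        "",
        "",  # blank line separating headers from the body
    ]
    return "\r\n".join(headers).encode("ascii") + body


# Addresses are the ones used in this article; VIDEO_ID is a placeholder.
request = build_set_uri_request(
    "192.168.1.18", 3000,
    "x-rincon-mp3radio://192.168.1.16:5000/play/VIDEO_ID",
)
```

Writing `request` to a file in binary mode gives something that can be piped to the speaker in the same way as a saved capture.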

Save it to request.txt, then:

cat request.txt | nc 192.168.1.18 3000

Enjoy your music !

This POC will soon become a full-featured Android / iOS application. I'll keep you updated on this.

Thank you very much for reading my article.